artificial intelligence

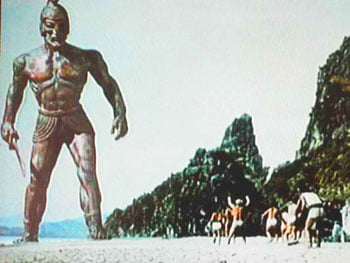

From ancient times man has dreamed of being able to give life to one of his creations. In Greek mythology, Hephaestus (Daedalus as well, I think?) constructed ‘robots’ (Talos, for example, Hephaestus’ gigantic bronze warrior of “Jason and the Argonauts” fame). What was not understood then was that intelligence would prove far more difficult to produce artificially than self-powered movement.

When I was at school, there was much talk about chess-playing computers and how, once we had a device that could play chess brilliantly, we would have artificial intelligence (AI). In the end, of course, Kasparov, arguably the greatest human chess player who ever lived, was beaten by IBM’s Deep Blue, but no one pretended it was possible to have a sensible conversation with IBM’s prodigy. It turned out, rather unsurprisingly in retrospect, that though chess is difficult for us, for a computer with a fast enough processor it is a cinch. (Incidentally, as far as I’m aware, computers have not been able to beat master players of Go… and this only goes to show that, to play well, Go requires more of that elusive human intelligence than chess does… which, again, will not surprise anyone who has played both.)

What was surprising was to discover that the things we find easy (recognizing our mother from different angles, under different lighting conditions, even if she’s wearing a disguise) are precisely the things computers find almost impossible. This was surprising, of course, because we didn’t actually know what constitutes intelligence. We still don’t. If we did, we would already have made a device that mimics it. Marvin Minsky has been predicting for decades that, within a few years, there would be AIs around to whom we would appear imbeciles… Clearly, the man suffers from the delusion that he knows what intelligence is. He’s not alone.

Though, like so many others, I have been fascinated by the idea of AIs for most of my life, I suspect we should be careful what we wish for. No doubt a ‘real’ AI would be able to do everything we do INDEFATIGABLY – and there’s the rub. An AI could do everything we can do, but without needing to rest, without moods or self-doubt. If we could make it, it could make a version of itself… and if that version were even infinitesimally more capable than its maker, well, we would have started a new evolutionary line that would quickly out-evolve us… and then Dr Minsky would finally be right…

Surely the key to ‘useful’ intelligence is the ability to ignore it – I’m sure an AI would be ‘brilliant’ at everything, but deciding when to act on its brilliance is what counts – otherwise it would become bogged down in an ever-expanding, branching train of thought, with infinite conclusions, each requiring a decision as to which thought should spark further action. Our skill, ironically, lies in knowing when to lounge around and do nothing – to daydream, or simply to prune a particular train of thought for no conscious reason… For me, intelligence is defined as much by what we can’t ‘do’, and how we cope with that, as by how well we execute tasks.

Defining the parameters of such an artificial intelligence is relatively easy, but it seems to me that this is merely describing its boundary and not its content. The content is, perhaps, one of the last great ‘black boxes’ left in science…

The latter part of what you say seems to me merely to split the black box. I can’t see how consigning one half to the darkness – and what you’re describing here is the unconscious – gets us any further. Except, perhaps, to say that, since we seem to need an unconscious to guide our consciousness, an AI will similarly need one…

In order to understand Artificial Intelligence, we’ll have to study Artificial Stupidity…

hmmm… that sounds good, but what does it mean?